In the previous two months, we've looked at Darktable and digiKam as open source photo management and editing suites. A third open source photographer's suite, called LightZone, has been around since 2005 as a closed source application, but got open sourced when its parent company dissolved in 2011. As a result, LightZone is now free and open source software for high-end photo editing and management. LightZone is written mostly in Java, although it uses some external libraries for certain image formats; even so, it's ardently cross-platform and very powerful.

Java dependencies

Being based mostly on Java, LightZone assumes a fairly robust Java environment. It's becoming rare for an operating system to pre-install Java, so if you don't use many Java applications on your system, you may not have all the Java packages LightZone expects to find. What works depends on which Java packages you install, so for the quickest and easiest results, install the full JDK. That should have everything you need.

If you prefer to micro-manage, you can install OpenJDK or, if you prefer, IcedTea, but if you take this route, extra Java classes may end up being required.
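Before launching LightZone, it can help to confirm that a Java runtime is on your PATH at all. Here is a minimal sketch of that check; the have_cmd helper is a name made up for this example, and it only tells you that java exists, not that every class LightZone wants is installed.

```shell
#!/bin/sh
# have_cmd NAME: succeed if NAME is an executable on the PATH.
# (have_cmd is a hypothetical helper, not part of LightZone or Java.)
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

if have_cmd java; then
    # java -version writes to stderr, so redirect it to stdout.
    java -version 2>&1 | head -n 1
else
    echo "No java on PATH: install a JDK before launching LightZone."
fi
```

If the version line prints, Java itself is present, and any remaining errors are most likely about individual missing classes.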

If you try to launch LightZone and it complains about missing Java classes, search for the error online and install the Java component that is said to resolve the missing class. I only encountered errors when installing Java piecemeal; specifically, I hit a java.lang.NoClassDefFoundError: javax/help/HelpSetException error that seems to be fairly common, but an online search and an install of the javahelp2 package fixed the issue.

Installing LightZone

LightZone can be downloaded from lightzoneproject.org, although you must register with the website before reaching a download link.

On Linux, you can install LightZone in two different ways. You can either install it to your filesystem using checkinstall or you can just run it from your user directory.

To install with checkinstall:

$ mkdir lightzone-4.1.1

$ tar xvf lightzone*4*xz -C lightzone-4.1.1

$ cd lightzone-4.1.1

$ sudo checkinstall

This builds and installs a .deb, .rpm, or .tgz package (depending on what's appropriate for your distribution). You can manage the package as usual using your system's packaging tools (dpkg, rpm, pkgtools).

To run from a local directory:

$ mkdir -p $HOME/bin/lightzone4

$ tar xvf lightzone*4*xz -C $HOME/bin/lightzone4

$ cd $HOME/bin/lightzone4

$ sed -i 's|usrdir=/usr|usrdir=$HOME/bin/lightzone4/usr|' ./usr/bin/lightzone

$ ln -s $HOME/bin/lightzone4/usr/bin/lightzone $HOME/bin/lightzone

To launch from the local directory, run $HOME/bin/lightzone from a shell, or modify the lightzone.desktop file to run that command for you.
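If you go the .desktop route, the change amounts to rewriting the file's Exec= line. Here is a rough sketch, assuming GNU sed and a standard desktop entry; set_desktop_exec is a helper name made up for this example, and the path in the usage comment is only a guess at where your copy of lightzone.desktop might live.

```shell
#!/bin/sh
# set_desktop_exec FILE COMMAND: point a .desktop launcher's Exec= line at COMMAND.
# Hypothetical helper; COMMAND must not contain '|', '&', or backslashes,
# because it is pasted into a sed replacement.
set_desktop_exec() {
    sed -i "s|^Exec=.*|Exec=$2|" "$1"
}

# Example (the location is an assumption; adjust it to your system):
#   set_desktop_exec "$HOME/.local/share/applications/lightzone.desktop" "$HOME/bin/lightzone"
```

Note that this replaces the whole Exec= line, so any arguments the original launcher passed (such as a file placeholder) are dropped unless you include them in the new command.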

LightZone basics

LightZone, like Darktable and a few closed source competitors, is designed to be a photography workflow application. LightZone is not trying to replace a photo compositor, such as GIMP; it's trying to make your photos easy to find, sort, and re-touch. An ideal user is anyone who takes lots of photos—such as a wedding photographer, a studio photographer, or any average tourist with a cell phone—and needs to ingest hundreds or thousands of shots, sort through 5 or 10 versions of essentially the same subject, choose the best one of the bunch, touch up any imperfections, and publish the results.

When you first launch LightZone, it starts in Browse mode. At first, your workspace is empty, so you can choose a folder containing images from the file tree on the left. The moment you select a directory with images in it, the images are loaded into the LightZone browser: thumbnails on the bottom third of the window, large preview on top.

LightZone browse mode

To view and work on RAW photos, you must have DCRaw libraries installed (just as for TIFF support, you need tiff libraries, and so on).

The thumbnail browser at the bottom of the window provides most of the functions you'd expect from a photo thumbnail viewer. Using the browser toolbar, you can adjust thumbnail size, rotate images, sort by file name, file size, or even metadata, such as your own rating, capture time, focal length, or aperture setting. Right-clicking on any thumbnail reveals even more actions, including the ability to rename, convert, and print the image.

A single-click on any thumbnail makes it the active selection. A control-click on two images brings both images up in the viewer, side by side, for easy comparison. You can view several images in your browser at a time (up to five on my display; after that, you may as well adjust the size of the thumbnails and view the photos that way).

Each preview image that appears in the top panel has an Edit button overlaid in the bottom right corner. To edit an image, click Edit on the photo, or click the Edit button in the upper left corner of the LightZone window to bring the active selection into the edit view.

Edit

In the edit (what you might think of as the "digital darkroom") view, the main areas of interest are:

- The left and right side panels hold presets and filter palettes. These are what you'll use to apply effects to your photograph.

- The center panel displays your image.

If you feel you need more room to work, hide panels using the vertical tabs on the left and right of the LightZone window.

Your workflow will probably start with the panel on the right. Underneath the Zones panel, there is a horizontal list of available filters. Any filter placed on a photograph appears in the filter stack underneath the filter list.

Filters

The filters in LightZone are, to me, a perfect mix of simple but must-have effects similar to those found in digiKam and Darktable, plus a few surprisingly powerful tools rivaling functions found in the likes of GIMP. For the former group, LightZone offers a zone mapper—like a levels filter, but from a more Ansel Adams perspective—hue and saturation control, sharpen and blur, white balance, color balance, and noise reduction. Each filter has a well-documented entry in LightZone's inbuilt user guide, and because they're non-destructive, you can try them without actually affecting your original photo.

Something that sets LightZone apart is that each of those "standard" filters also has a selection- and color-based constraint system, so any filter you apply can be limited to only part of your image. Other applications can do that, but the fact that LightZone builds the option into every default filter is a real time-saving convenience.

LightZone filters

Another little surprise that LightZone ships with is the clone filter. By default, the clone filter clones an image and overlays it onto itself. This is great for interesting compositing effects, but it's even better once you clone parts of an image. Now you're compositing, just as you might in GIMP.

Presets

Each filter can have a preset: a temporary snapshot of a setting that you want to keep on hand, which is sort of a reverse-undo function. If you adjust a filter and get a result that you like, for example, you can create a preset by right-clicking on the filter icon in the filter's toolbar and selecting Remember Preset.

Then, imagine that you adjust the filter again but end up with something less appealing than you had before. You want to undo your changes, but you've been through a few iterations of each slider in the filter, so what would you undo? Of course, you wouldn't; instead, right-click on the filter icon in the filter's toolbar and select Apply Preset, and all of your settings are set back to where they were when you took the preset snapshot.

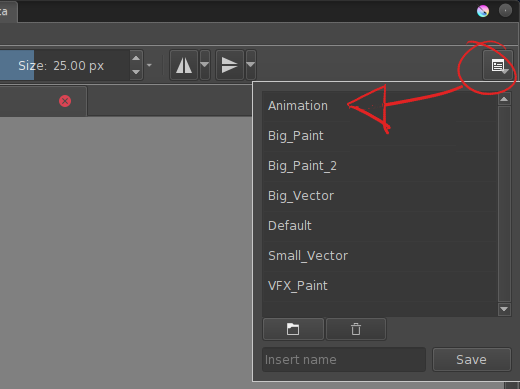

Styles

More complex than presets, and far more permanent, are styles. Whereas a preset lasts only until you overwrite it with a more recent snapshot, a style gets saved to your LightZone configuration forever. Styles are also broader in scope; instead of just taking a snapshot of one filter's settings, a style can contain a whole stack of filters.

Preset styles are available in the left side panel. If the preset style options are not visible, click the Styles vertical tab to reveal them.

Hovering over a style displays your photo in the upper left corner, with that style applied. Double-click the style name to apply the filters it contains to your photograph. Be warned: this adds filters on top of any filters you have already applied, so be sure to start with a clean slate if you want to see just the style's effect.

LightZone Styles

Any combination of filters can be saved as a Style with the Add a new Style button at the top of the window. By default, this gathers all current filters and settings from your currently active image and wraps them in a style.

Compare the contrast

To "flip back" to your original image as you edit, click and hold the Orig button in the top toolbar. Release to get back to your edit in progress.

History

The history palette on the left is an undo stack that persists while you work. When you leave Edit mode, your history goes away.

History

Saving images

To save an image, click the Done button in the top toolbar. This option never overwrites your original photo; it saves a new version, differentiated by inserting _lzn in the filename. The format that LightZone saves to is determined by your LightZone preferences.
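Because saved versions sit alongside your originals, it can be handy to list just the edited copies from a shell. Here is a minimal sketch; list_versions is a helper name made up for this example, and the marker is passed as a parameter because the exact string (_lzn in the usage comment) is my assumption about how your LightZone build names its output.

```shell
#!/bin/sh
# list_versions DIR MARKER: print files under DIR whose names contain MARKER.
# Hypothetical helper; check the marker against the names LightZone
# actually produces on your system.
list_versions() {
    find "$1" -type f -name "*$2*" | sort
}

# Example (directory and marker are assumptions):
#   list_versions "$HOME/Pictures" _lzn
```

The search is recursive, so pointing it at the top of your photo tree lists every edited version beneath it.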

Preferences

Go to the Edit menu and select Preferences to configure LightZone's default behavior. There are three tabs in the Preferences window:

- General: Set how much RAM LightZone can use, the location of its scratch folder, the color profile of your display, and more.

- Save: Default file format of saved (exported) images and associated options (compression levels, bit depth, ppi, and so on).

- Copyright: The copyright and copyleft message to embed into exported images.

Preferences

LightZone

LightZone is a capable, "prosumer"-level application: it's not quite as feature-rich as Darktable or digiKam, but it's not as simplistic as something like F-Spot. Being Java-based, LightZone is easy to install on all platforms, ensuring a consistent workflow across all users. And the results speak for themselves.

Try LightZone out and let me know what you think of it.

CC-BY-SA 4.0
